Okay, so as a Linux sysadmin, I’ve occasionally needed to rebuild a software RAID (mdadm) array. This process usually takes forever, and to make sure it doesn’t take any longer than necessary, I usually leave the volume in question unmounted during the resync/reshape. I know, I know, you can safely read and write to an array during the process; otherwise anaconda (the most superior Linux installer) couldn’t move forward with an install for a long while. But I still like the reshape to go faster. I’d rather not use the system for an hour and have it be perfectly fast afterwards than have the machine crawl along all day.

Long-winded ideals speech aside, I want to monitor how long the rebuild is going to take in quasi-realtime. Details of the software RAID can be found in the file /proc/mdstat. You can cat this file once in a while to see the progress. But that was never good enough: too much of that whole keyboard-button-pushing thing just to get the statistics to update. So I would usually type this in real quick:

while true; do clear; cat /proc/mdstat; sleep 1; done

It’s just a simple loop that keeps the information fresh on the screen. Well, boy, did I find out something today that helps greatly with that dilemma. Enter the Linux “watch” command, courtesy of the procps package. The watch command reruns whatever command you give it at a fixed interval and redraws the output on the screen. For example, the command:

watch cat /proc/mdstat

will refresh the display of the file just like the loop does. But watch does it even better: it prints a clock in the corner and tells you how often it is updating. Plus, watch doesn’t make the screen flicker for a millisecond like the shell clear does. The default delay between updates is 2.0 seconds, but you can change that easily via the -n flag. For example, to update every second, as in the custom loop above, try the command:

watch -n 1 cat /proc/mdstat

and be amazed. It would seem at first glance that the delay can be set as low as 0.1 seconds. Pretty neat. It’s an interesting tool, and certainly one that is very useful for these sorts of things. Check out the man page for more information on the command.

Quick tip. You’re working on setting up a server late at night. It’s dark out. Your roommate is sleeping. And every time you push the damn tab button, the computer makes a beeping sound. WHAT THE HELL?!

Silence the PC speaker with “rmmod pcspkr” (run it as root). Make it loud again with “modprobe pcspkr”.

Your roommate will thank me for this… whether he/she knows it or not 😀

Here’s a tip to improve the speed and efficiency of your C programs: BIT OPERATIONS ARE AMONG THE CHEAPEST THINGS A CPU CAN DO!!! Let’s say you want to multiply a variable by 2 in your C program. Well, you could do:

variable = variable * 2;

But let’s consider that a general multiplication has to cope with two arbitrary operands: the variable, and in this case the constant 2. It would be nice if there were a cheaper way to perform some of these basic math operations when one operand is a power of two. Luckily, there is:

variable <<= 1;

This simply shifts the bits one to the left. Why does this work? Let's say:

00000100 = 4

Shifting the bits one to the left yields:

00001000 = 8
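To make the shift concrete, here is a minimal sketch in C. The function names are made up for illustration, and unsigned types are used on purpose so that the shifts are well defined for every input:

```c
#include <stdint.h>

/* Multiply by two the ordinary way. */
uint32_t times_two_mul(uint32_t x)
{
    return x * 2u;
}

/* Multiply by two by shifting every bit one place to the left.
 * The shifted value has to be returned or assigned; writing
 * "x << 1;" on its own line computes the result and throws it away. */
uint32_t times_two_shift(uint32_t x)
{
    return x << 1;
}

/* Halve an unsigned value by shifting one place to the right.
 * Odd inputs lose their lowest bit, just like integer division. */
uint32_t half_shift(uint32_t x)
{
    return x >> 1;
}
```

Calling times_two_shift(4) gives 8, matching the bit patterns shown above, and half_shift(8) takes you back to 4.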

This is simply an underlying principle of the binary system that computers use. This tip also works in reverse: to divide an unsigned value by two, you can just bitshift one to the right! (Be careful with negative signed values, where a right shift can give a different result than division.) Now, I don't have any empirical evidence at the moment to support this claim numerically, and an optimizing compiler will usually make this substitution for you when you multiply or divide by a constant power of two, but a bitshift should never run slower than the arithmetic operation it replaces. Now here's the nugget of the day, as found on an interwebs forum, allegedly from the original K&R C book. It's a simple test to determine if a number is a power of two:

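/* A power of two has exactly one bit set; zero has none, so rule it out first. */
if (phys_mem_size != 0 && !(phys_mem_size & (phys_mem_size - 1))) {
    printf("This number is a power of two.\n");
}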

How's it work? Consider that a power of two has exactly one bit set. It follows, therefore, that subtracting one from it clears that bit and sets every bit below it to one. Taking the bitwise AND of these values results in something like:

16 = 00010000

15 = 00001111

& = 00000000

The important thing is to negate the result, that is, if NOT (this AND that): if the number is a power of two, the AND returns zero, which is false in C. There are plenty of other operations in C that can take advantage of the bit patterns and power-of-two nature of computers. Such ops can sometimes be performed on the mantissa of floats to produce interesting results that are mathematically correct. Do yourself and your computer a favour: keep this sort of low-level trickery in mind when trying to solve a problem while programming. Used well, it can help you produce cleaner, faster, and more efficient code, not to mention making it look more professional.
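If you want to reuse the power-of-two test, it could be wrapped in a small helper along these lines. This is only a sketch, and the name is_power_of_two is my own invention, not something from K&R. It also rejects zero, which has no bits set and would otherwise slip through the bare n & (n - 1) mask:

```c
#include <stdbool.h>
#include <stdint.h>

/* Returns true when n has exactly one bit set, i.e. n is 1, 2, 4, 8, ...
 * Subtracting one clears that single bit and sets all the bits below it,
 * so the bitwise AND of n and n - 1 comes out as zero.
 * Zero is excluded explicitly because it is not a power of two. */
bool is_power_of_two(uint64_t n)
{
    return n != 0 && (n & (n - 1)) == 0;
}
```

With this, is_power_of_two(16) is true, while is_power_of_two(15) and is_power_of_two(0) are both false.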

Here’s an interesting concept, and one that’s, rather surprisingly, little known. It’s called tetration, a concept brought up so rarely that most computer spell-check dictionaries don’t even recognize it as a word. I was organizing my filesystem hierarchy today, and found this old paper I wrote for my Discrete Mathematics class. I gave it a quick re-read, and the concepts still amaze me. It is truly impossible to ignore the beauty of mathematics, especially after seeing something like this.

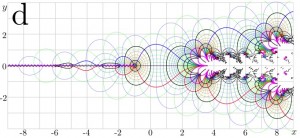

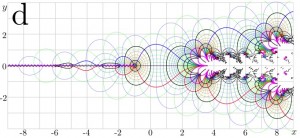

Drawing of the analytic extension f = F(x + iy) of tetration to the complex plane.

The basic premise of tetration is simply a continuation of what has already been examined since, well, probably kindergarten. Everyone knows what addition, subtraction, multiplication, and division are. But consider this concept for a moment: multiplication is just repeated addition, exponentiation (x^y) is just repeated multiplication, and tetration, well, that’s just repeated exponentiation! It has other names as well. Since it looks something like a^a^a^a, it has been called a power tower. It is also interesting to note that we’ve all seen an expression somewhere or another that may have looked something like a^b^2. Now, the way to evaluate this is to start at the top by squaring b, then raise a to that power. But if a and b both happened to equal two, you would be looking at 2^2^2, and well, that’s tetration! It’s pretty neat to see how often tetration can turn up, and yet it is relatively unknown.
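For the curious, the "repeated exponentiation" idea can be sketched in a few lines of C. The names ipow and tetrate are hypothetical helpers of mine, the height is assumed to be a non-negative integer, and nothing here checks for overflow, so only tiny inputs give meaningful answers:

```c
#include <stdint.h>

/* base raised to exp by repeated multiplication.
 * No overflow checking: results wrap around past 64 bits. */
uint64_t ipow(uint64_t base, uint64_t exp)
{
    uint64_t result = 1;
    while (exp-- > 0) {
        result *= base;
    }
    return result;
}

/* A power tower of `height` copies of a, built from the top down:
 * height 0 gives 1, and each further level computes a^(previous level).
 * For example, three copies of 2 is 2^(2^2). */
uint64_t tetrate(uint64_t a, unsigned height)
{
    uint64_t result = 1;
    for (unsigned i = 0; i < height; i++) {
        result = ipow(a, result);
    }
    return result;
}
```

Here tetrate(2, 3) works out to 2^(2^2) = 16 and tetrate(2, 4) to 2^16 = 65536. One more level, tetrate(2, 5), would be 2^65536, which is far too large for any fixed-width integer.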

And here’s where it starts to get even stickier: it doesn’t stop at tetration! If there’s an operation n, that means that there is an operation n+1 defined as the repeated nth operation. So that means there’s an infinite number of operations that can be performed on numbers. It’s also crazy to think about what happens as n heads off to infinity: for inputs larger than two, the results blow up without bound (although 2 and 2 stubbornly give 4 at every level). There’s a lot of power to be found in tetration and nth-term iterative operators, and oddly enough, if I had to guess where a solution to the [Riemann hypothesis] might start, assuming it has one, I would say this would be a good place to begin. This is some crazy stuff here, and if it’ll help anyone to further understand, I’ve uploaded my findings on tetration to this server for download [here]. Enjoy!
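The "operation n+1 is the repeated nth operation" ladder can also be written down recursively. What follows is a rough sketch, not anything taken from my paper: the name hyper is made up, level 1 is taken to be addition (so 2 is multiplication, 3 is exponentiation, 4 is tetration), and it assumes n is at least 1 and the inputs are tiny, since the values and the recursion depth explode almost immediately:

```c
#include <stdint.h>

/* Operation number n applied to a and b, for n >= 1:
 *   n = 1: a + b
 *   n = 2: a * b
 *   n = 3: a ^ b
 *   n = 4: tetration (a power tower of b copies of a)
 * Each level applies the level below it b times. No overflow checks. */
uint64_t hyper(unsigned n, uint64_t a, uint64_t b)
{
    if (n == 1) {
        return a + b;
    }
    if (b == 0) {
        /* Starting value: 0 for multiplication, 1 for everything above. */
        return (n == 2) ? 0 : 1;
    }
    return hyper(n - 1, a, hyper(n, a, b - 1));
}
```

For example, hyper(2, 3, 4) is 12, hyper(3, 2, 3) is 8, and hyper(4, 2, 3) is 16. One curiosity: hyper(n, 2, 2) comes out as 4 at every level.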

So yeah, I think that I will use this little corner of webspace as my own. Hopefully the posts won’t be absolute rubbish, either. I found this template somewhere online. I kind of like it, let me know if you like it too, please.

Make sure to check back somewhat often – I’ll update it as often as I can, when time permits. That’ll probably happen more over this coming summer, when I have some interesting things to talk about, and spare time.

Enjoy.
